We may not like to admit it, but we are all prone to subconscious bias. Our decisions are not as rational and logical as we may think they are. For trivial decisions, the consequences are not worth worrying about, but in business, government and our communities, biased decision-making can have serious implications – on how we invest, who we hire, how we design policy and how we make group decisions.

So strategies to reduce our biases, known as ‘debiasing’, are valuable. However, many past attempts to debias our decision-making have been unsuccessful. Even Daniel Kahneman – a Nobel Laureate who has spent much of his life identifying and deconstructing subconscious bias – has struggled to reduce biases in his own decision-making, noting in his bestselling book ‘Thinking, Fast and Slow’ that his “intuitive thinking is just as prone to overconfidence, extreme predictions, and the planning fallacy as it was before I made a study of these issues.”

Yet, a recent project led by Carey Morewedge, Professor of Marketing at Boston University, has shown that simple training programmes can improve decision-making, even in the long term.

The project tested two initiatives to help people identify and reduce bias in their decision-making. Participants first completed a test to measure baseline levels of six biases identified by behavioural scientists:

- Bias blind spot – the tendency to see oneself as less biased than other people, or to be able to identify more cognitive biases in others than in oneself.

- Confirmation bias – the tendency to seek or interpret evidence in ways that support our pre-existing beliefs or hypotheses.

- Fundamental attribution error – the tendency to explain someone’s behaviour by their character and personality traits when it is more accurately explained by their circumstances and situation at that moment in time.

- Anchoring – our tendency to rely too heavily, or ‘anchor’, on one trait or piece of information when making decisions, often the first piece of information we encounter.

- Representativeness heuristic – a mental shortcut where we rely on partial information and stereotypes to make a judgement about a person, rather than on statistical information.

- Projection bias – the tendency to assume that our tastes and preferences will remain the same over time.

Next, participants were split into two groups, each of which learned about these six biases through a different method:

Group 1: Instructional video

The video explained biases and decision-making shortcuts – known as heuristics – and how they can lead to suboptimal decisions. The narrator then defined specific biases, presented situations in which they occur and provided examples.

Group 2: Computer game

The second group of participants played an interactive computer game called ‘Missing: The Pursuit of Terry Hughes’. The game elicited a number of biases by asking players to make decisions based on limited information. See the box below for an illustration of how this worked.

Box 1: Example of assessing confirmation bias. Players had to find their missing neighbour, Terry, and were primed with the idea that Terry had possibly been kidnapped. Players could then examine objects that either confirmed or disconfirmed this initial hypothesis. The game tracked which objects players focused on to determine how strongly they were affected by confirmation bias.

At the end of each level, players received:

- definitions and examples of relevant biases;

- personalised feedback on which biases they had exhibited, to help them avoid those biases in the next level. This feedback let players track their progress and gave them positive reinforcement;

- strategies for mitigating bias, such as relevant statistical rules, methods of hypothesis testing and the importance of considering alternative explanations and alternative anchors.

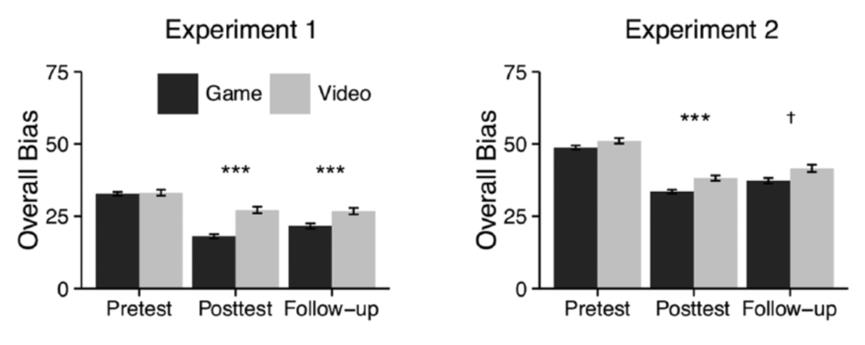

Gaming reduced bias

An immediate post-test and a follow-up test 8 or 12 weeks later revealed that the computer game was the more successful of the two methods at reducing bias (see Figure 1). Playing the computer game reduced cognitive biases by 39% in the short term and by 29% in the long term. The instructional video reduced cognitive biases by around 20% in both the short and the long term. The greater efficacy of the computer game suggests that personalised feedback and applying what has been learned through practice are particularly important in reducing bias.

Figure 1: Results

This research was commissioned in 2010 by the US Office of the Director of National Intelligence as project ‘Sirius’ (a pun on ‘serious’) to train intelligence analysts to recognise and mitigate cognitive biases. However, the programme has wider implications, since debiasing techniques are also relevant for doctors, lawyers, judges and the military, as well as for organisational decisions such as hiring new employees.

Other organisations are also launching initiatives to eliminate bias. For instance, the start-up GapJumpers uses blind auditions to help employers debias the selection process; Deloitte now removes any reference to the university or school an applicant attended; the Behavioural Insights Team has launched a digital platform called ‘Applied’ to reduce hiring bias; and Facebook has developed training sessions and video modules to reduce biased decision-making within the organisation and promote a positive working environment. And in September, four British universities launched a pilot study to ‘name-blind’ their admissions process in an effort to reduce racial, gender and social bias.

These and other moves show that companies and organisations are now recognising implicit bias as a problem and are finding constructive ways to tackle it.

Does this give us renewed hope that we can, after all, debias our thinking? Let’s answer this question with a cautious ‘yes’. Engaging in active learning programmes, and building rigorous structures and processes that automatically remove elements which may bias our thinking, both show considerable promise. Whilst we will probably always suffer from bias, we may soon be able to operate in a world which is designed to help us counter it.

Source: Morewedge, C. K., et al. (2015). Debiasing decisions: Improved decision making with a single training intervention. *Policy Insights from the Behavioral and Brain Sciences*, 2(1), 129–140.

Read more from Crawford Hollingworth in our Clubhouse.